AMD Unveils Instinct MI350X Series AI GPU

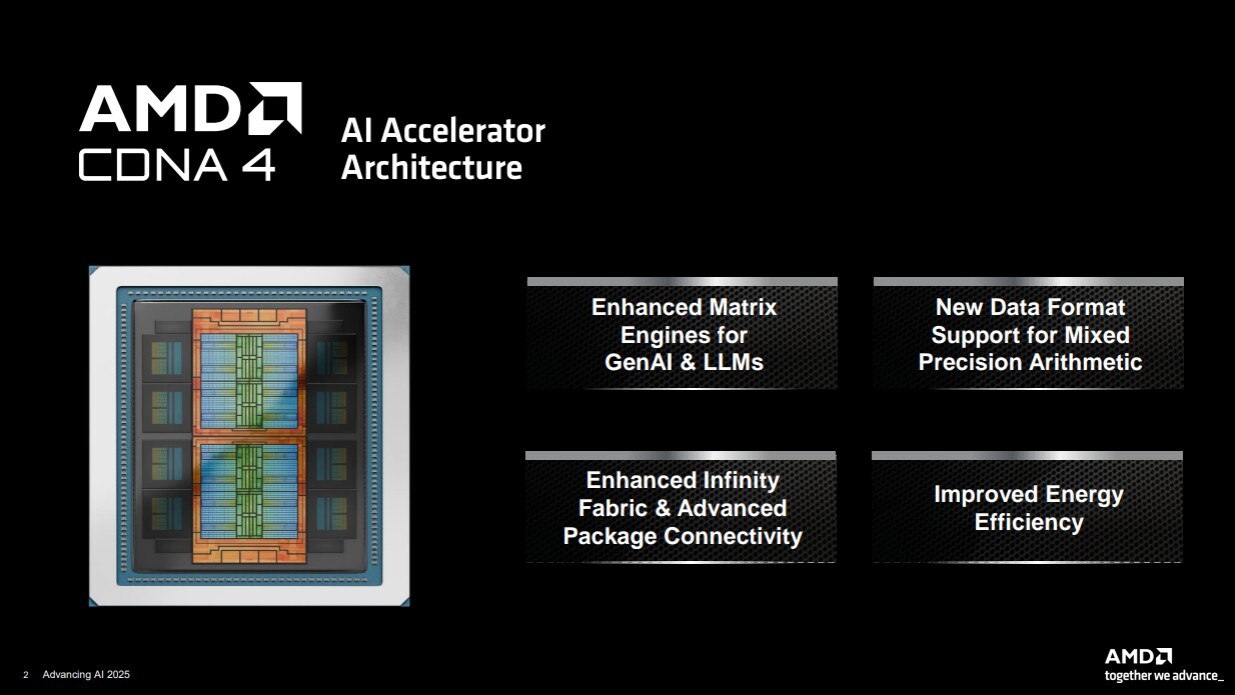

AMD has launched its Instinct MI350X series of AI GPUs. Based on the company's latest CDNA 4 compute architecture, the series is designed to rival NVIDIA's B200 "Blackwell" AI GPUs. In its presentation, AMD compared the top-spec Instinct MI355X directly against the B200.

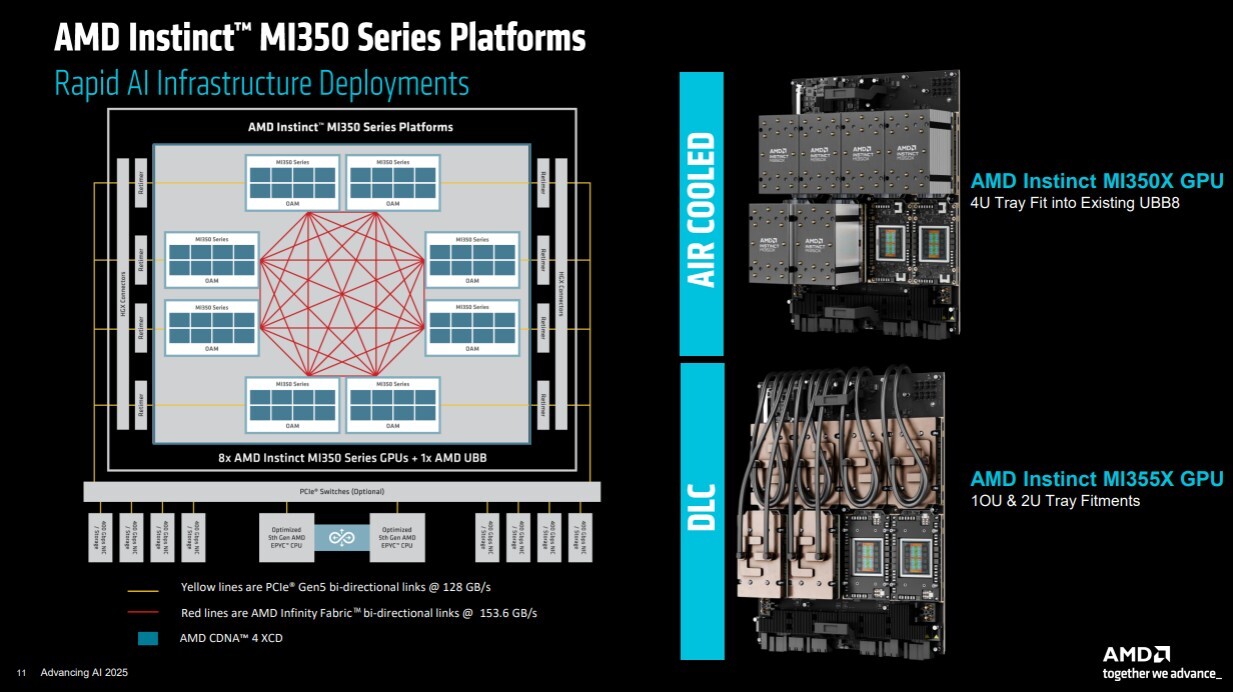

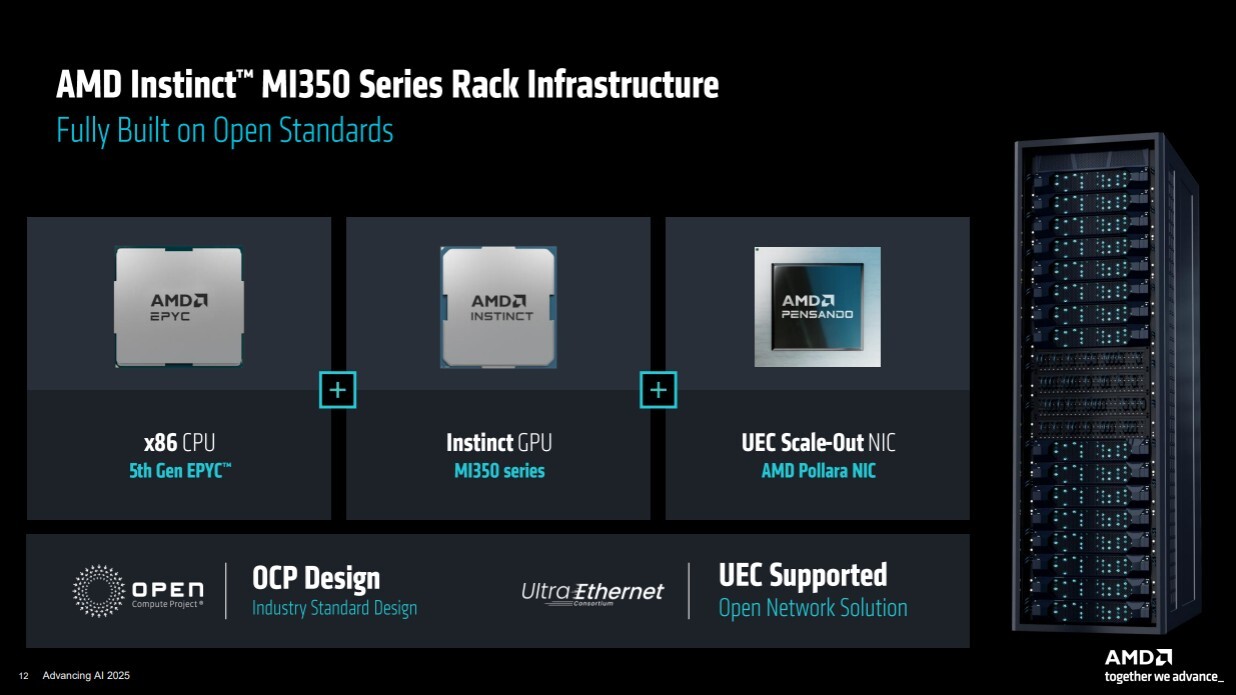

Alongside the CDNA 4 architecture, the MI350X launch introduces the ROCm 7 software stack and a hardware ecosystem based on the Open Compute Project (OCP) specification. This ecosystem includes AMD EPYC Zen 5 CPUs, Instinct MI350 series GPUs, AMD Pensando Pollara scale-out NICs supporting Ultra Ethernet, and industry-standard racks and nodes available in both air-cooled and liquid-cooled form factors.

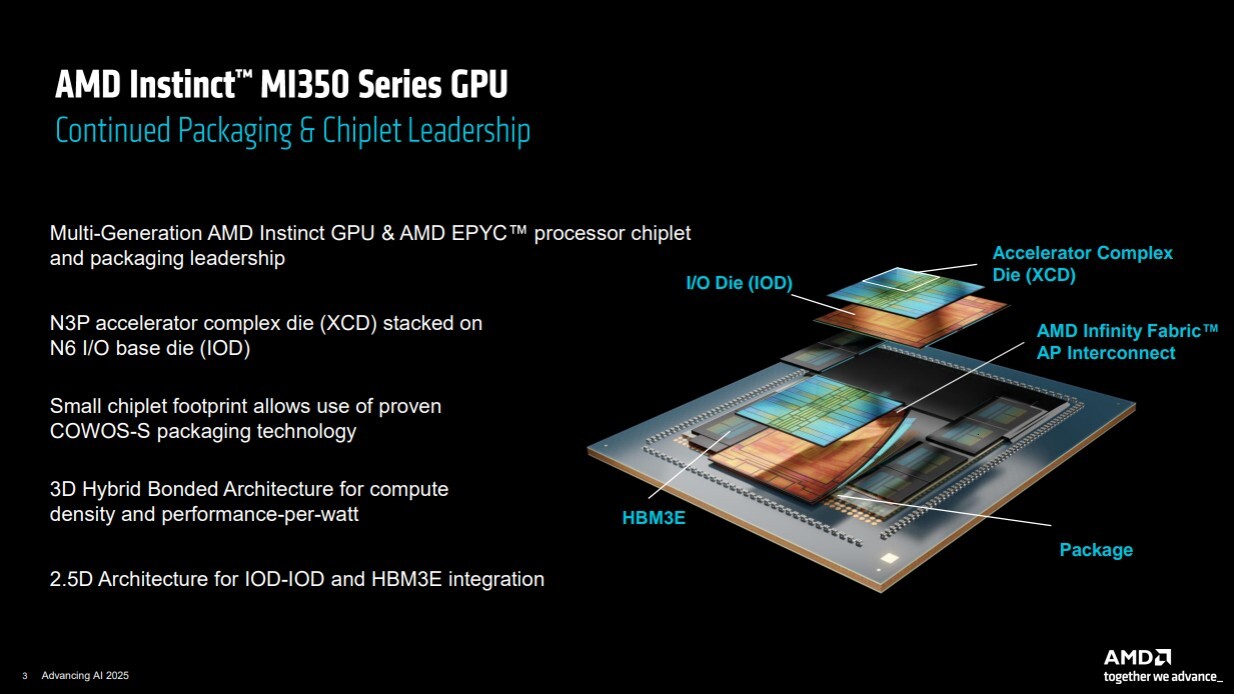

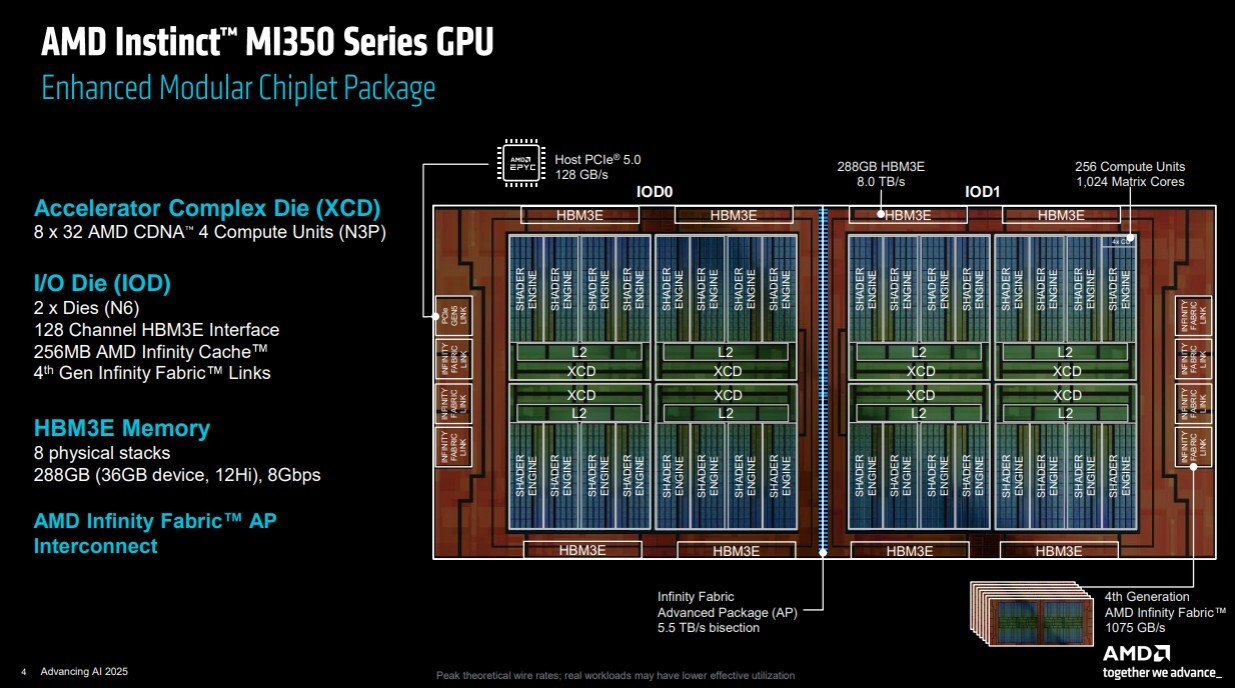

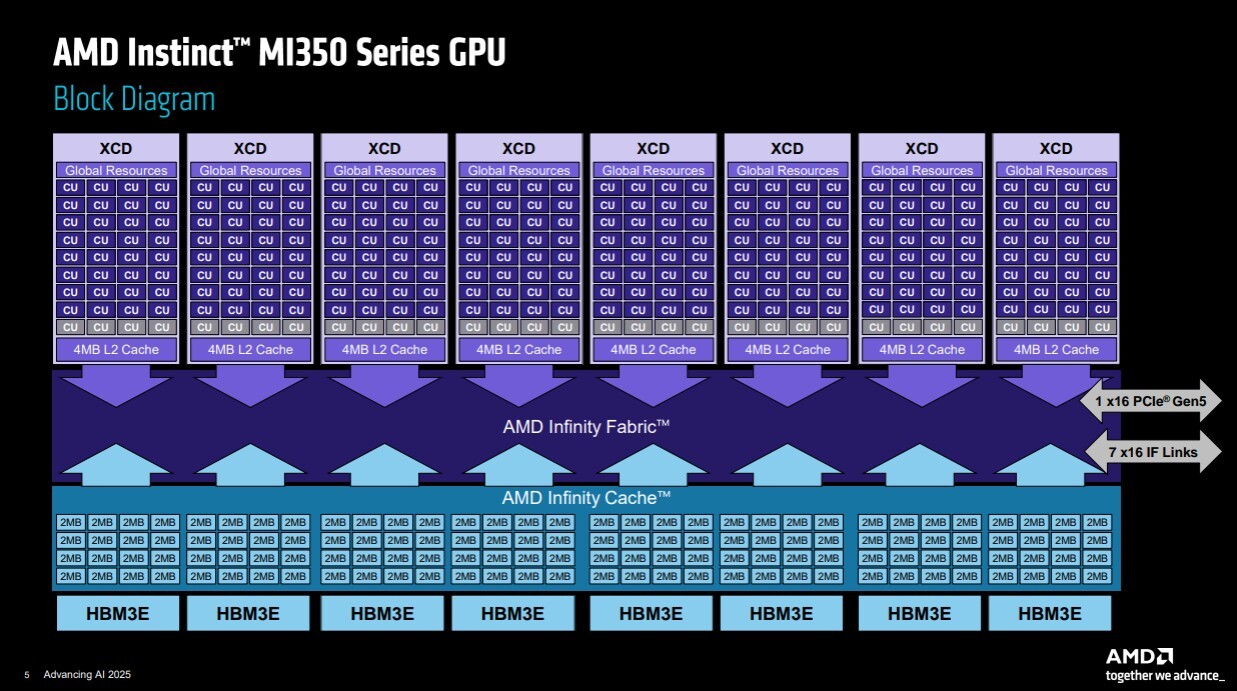

The MI350X is a large chiplet-based AI GPU built from stacked silicon. It has two base tiles, called I/O dies (IODs), each built on the 6 nm TSMC N6 process. Each IOD provides the microscopic wiring for up to four Accelerator Compute Die (XCD) tiles stacked on top of it. The IODs also house the memory controllers, cache memory, interfaces, and a PCI-Express 5.0 x16 root complex.

Each XCD is built on the 3 nm TSMC N3P foundry node and contains a 4 MB L2 cache and four shader engines with 9 compute units each. That works out to 36 compute units per XCD and 144 compute units per IOD. The two IODs are joined by a bidirectional interconnect that keeps their caches fully coherent, giving the package a total of 288 compute units.
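The compute-unit arithmetic above follows directly from the die hierarchy, so it can be sketched as a small data model. This is an illustrative sketch only: the class names `XCD`, `IOD`, and `Package` are hypothetical, every IOD is assumed to be fully populated with four XCDs, and the model counts the physical compute units described in the article without trying to model memory, cache hierarchy beyond L2, or which units a given SKU enables.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class XCD:
    """One Accelerator Compute Die (TSMC N3P): four shader engines of 9 CUs."""
    shader_engines: int = 4
    cus_per_engine: int = 9
    l2_cache_mb: int = 4

    @property
    def compute_units(self) -> int:
        return self.shader_engines * self.cus_per_engine


@dataclass(frozen=True)
class IOD:
    """One I/O die (TSMC N6); assumed fully populated with four stacked XCDs."""
    xcds: tuple[XCD, ...] = field(
        default_factory=lambda: tuple(XCD() for _ in range(4))
    )

    @property
    def compute_units(self) -> int:
        return sum(xcd.compute_units for xcd in self.xcds)


@dataclass(frozen=True)
class Package:
    """Two cache-coherent IODs joined by the bidirectional interconnect."""
    iods: tuple[IOD, ...] = field(default_factory=lambda: (IOD(), IOD()))

    @property
    def xcd_count(self) -> int:
        return sum(len(iod.xcds) for iod in self.iods)

    @property
    def compute_units(self) -> int:
        return sum(iod.compute_units for iod in self.iods)

    @property
    def total_l2_mb(self) -> int:
        # Each XCD carries its own 4 MB L2; this simply sums them.
        return sum(xcd.l2_cache_mb for iod in self.iods for xcd in iod.xcds)


if __name__ == "__main__":
    pkg = Package()
    print("XCDs:", pkg.xcd_count)
    print("compute units:", pkg.compute_units)
    print("L2 cache (MB):", pkg.total_l2_mb)
```

Under these assumptions the model reproduces the article's figures: 36 CUs per XCD, 144 per IOD, and 288 for the package across eight XCDs, along with 32 MB of L2 summed over all XCDs.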

At the platform level, each blade supports up to eight MI350 series GPUs, which pool their memory across a network of 153.6 GB/s links. Each package also has a PCI-Express 5.0 x16 link to one of the node's EPYC "Turin" processors, which handle serial processing.
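To put the 153.6 GB/s link figure in context, the blade-level arithmetic can be sketched as below. This rests on an assumption the article does not state: that the eight GPUs form a full mesh, with one dedicated link between every pair. The article also does not say whether 153.6 GB/s is per direction or bidirectional, so the totals simply multiply the quoted per-link figure. The helper names are hypothetical.

```python
from itertools import combinations

LINK_GBPS = 153.6    # per-link bandwidth quoted in the article (GB/s)
GPUS_PER_BLADE = 8   # maximum MI350 series GPUs per blade


def full_mesh_links(num_gpus: int) -> list[tuple[int, int]]:
    """Assumed topology: one dedicated link for every unordered GPU pair."""
    return list(combinations(range(num_gpus), 2))


def per_gpu_bandwidth_gbps(num_gpus: int, link_gbps: float) -> float:
    """In a full mesh, each GPU has one link to each of its num_gpus - 1 peers."""
    return (num_gpus - 1) * link_gbps


def blade_bandwidth_gbps(num_gpus: int, link_gbps: float) -> float:
    """Sum of the quoted bandwidth over every distinct link in the mesh."""
    return len(full_mesh_links(num_gpus)) * link_gbps


if __name__ == "__main__":
    links = full_mesh_links(GPUS_PER_BLADE)
    print(f"links in blade: {len(links)}")
    print(f"per-GPU link bandwidth: "
          f"{per_gpu_bandwidth_gbps(GPUS_PER_BLADE, LINK_GBPS):.1f} GB/s")
    print(f"sum over all links: "
          f"{blade_bandwidth_gbps(GPUS_PER_BLADE, LINK_GBPS):.1f} GB/s")
```

If the full-mesh assumption holds, an eight-GPU blade needs 28 links, and each GPU would have 7 links totaling 1,075.2 GB/s of quoted link bandwidth.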